Research Topics

Topic 1: Distributed Control of Network Systems

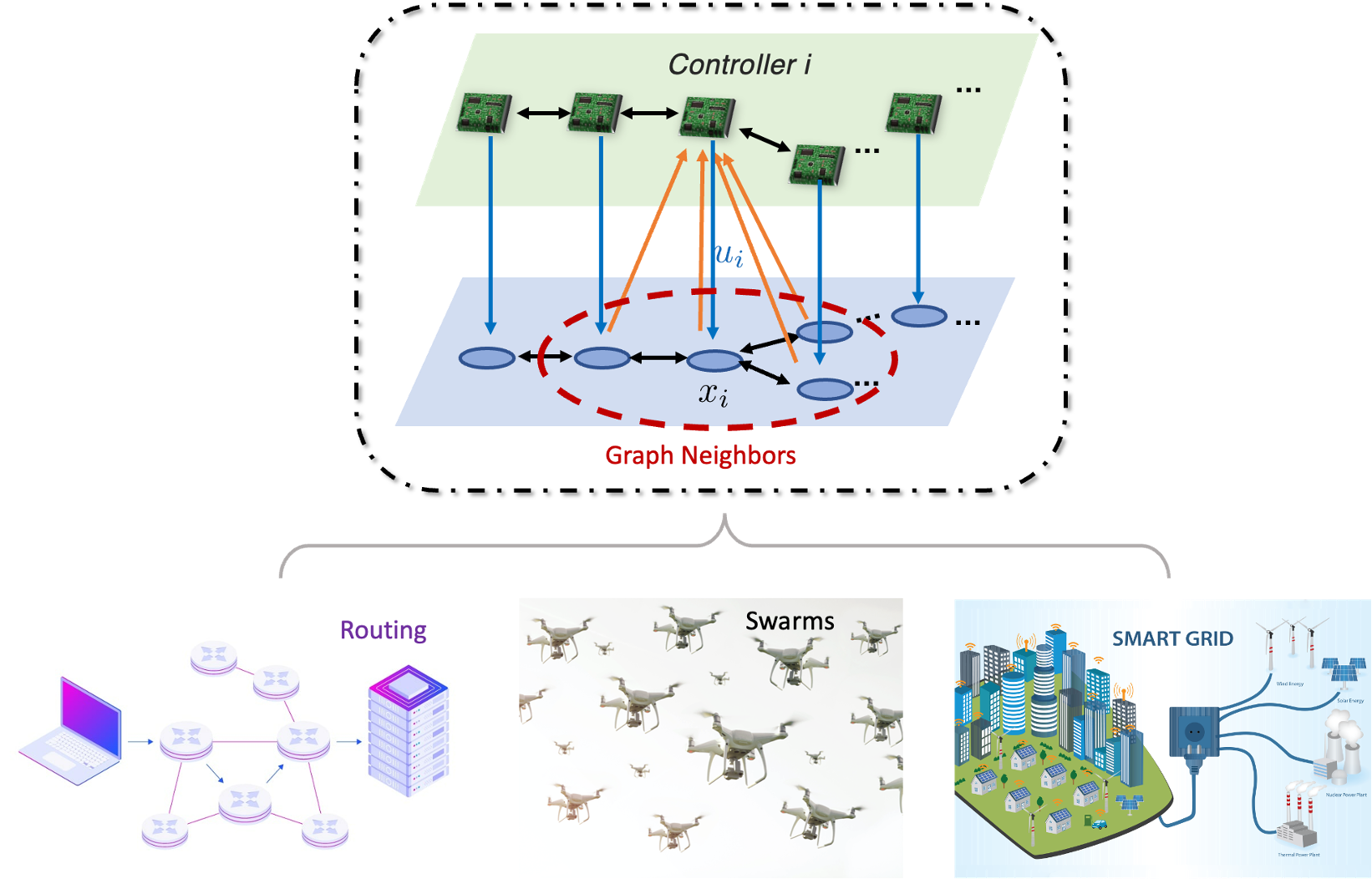

In many multi-agent systems, limited sensing and communication capabilities force agents to choose their local actions based on local information. My research focuses on distributed learning and control of network systems: how multiple controllers, often geographically dispersed within a network, can collaboratively achieve global objectives by sharing only local information and relying on limited communication. By leveraging techniques from control theory, optimization, and network theory, my research aims to improve the efficiency and reliability of applications ranging from smart grids and automated transportation systems to large-scale industrial processes and sensor networks. Specific topics that I am currently interested in include:

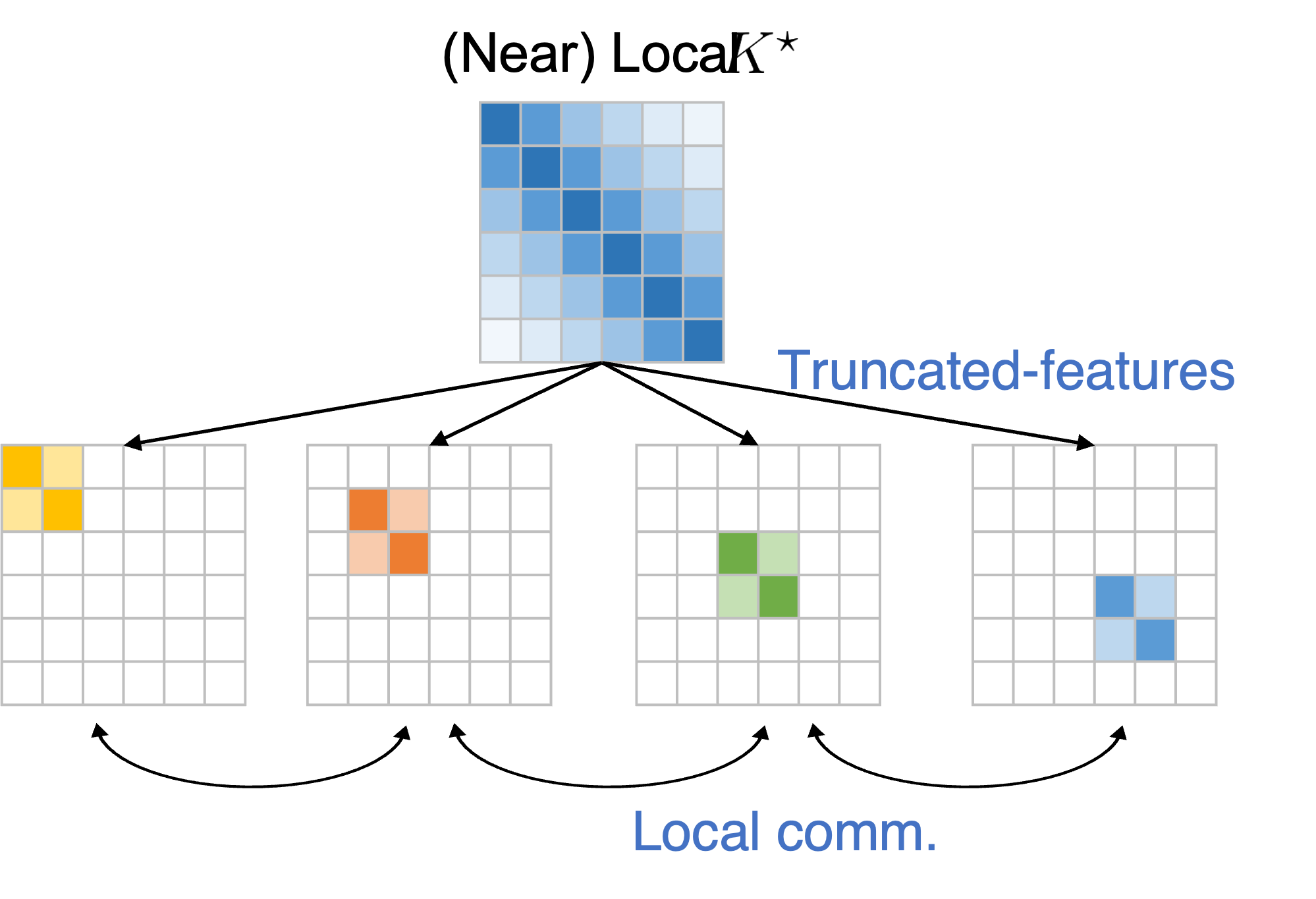

- Better theoretical understanding of the limitations and capabilities of distributed controllers (e.g., what is the optimality gap between distributed local controllers and the optimal global controller?)

- Practical distributed control and learning algorithm design for network systems (e.g., robotic swarms, power systems)

- Integrating control and learning (e.g., can we jointly learn good communication and distributed control policies?)

Selected publications:

- Scalable Reinforcement Learning for Linear-Quadratic Control of Networks

Johan Olsson, Runyu Zhang, Emma Tegling, Na Li

American Control Conference (ACC), 2024

- On the Optimal Control of Network LQR with Spatially-exponential Decaying Structure

Runyu Zhang, Weiyu Li, Na Li

American Control Conference (ACC), 2023 (Preparing for Journal Submission)

- Multi-Agent Coverage Control with Transient Behavior Consideration

Runyu Zhang, Haitong Ma, Na Li

Learning for Dynamics and Control Conference (L4DC), 2024

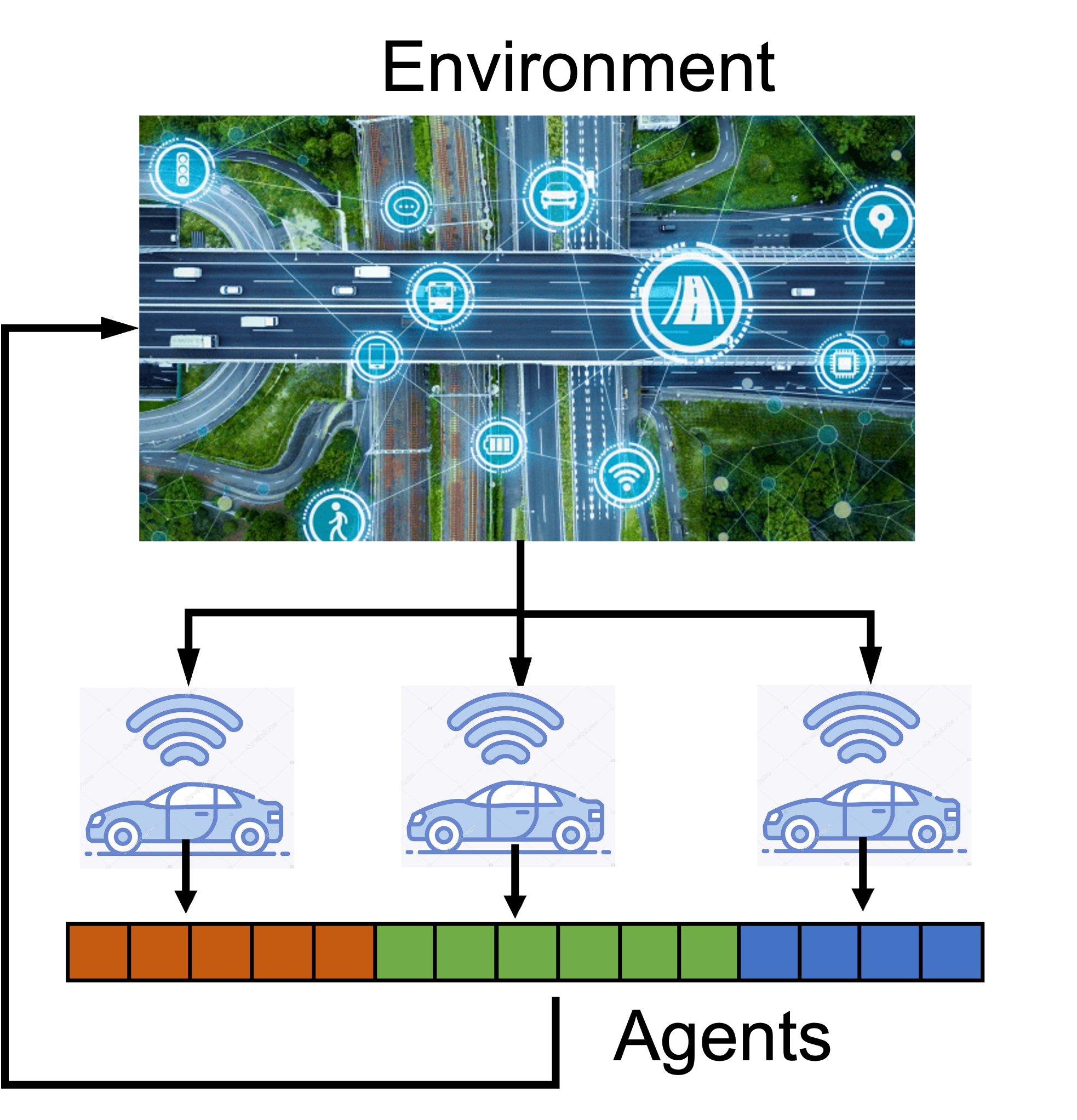

Topic 2: Multi-agent Reinforcement Learning

Unlike single-agent RL, multi-agent RL deals with multiple agents interacting in a shared, dynamic environment. This introduces unique challenges: the need for coordination, non-stationarity caused by other agents that are learning at the same time, and the complexity of strategic interactions. Studying multi-agent RL is crucial for understanding systems where autonomous agents must learn to coexist, compete, or collaborate, such as autonomous vehicle fleets and complex resource management scenarios. The key question that I try to answer is how to encourage collaborative behavior in the face of these challenges.

Selected publications:

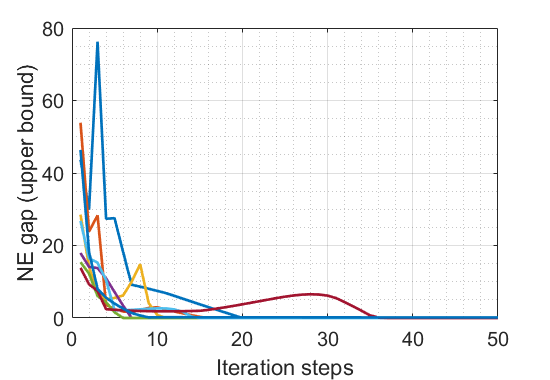

- Gradient Play in Stochastic Games: Stationary Points and Local Geometry

Runyu Zhang, Zhaolin Ren, Na Li

IEEE Transactions on Automatic Control (TAC), 2024

- On the Global Convergence Rates of Decentralized Softmax Gradient Play in Markov Potential Games

Runyu Zhang, Jincheng Mei, Bo Dai, Dale Schuurmans, Na Li

Advances in Neural Information Processing Systems (NeurIPS), 2022

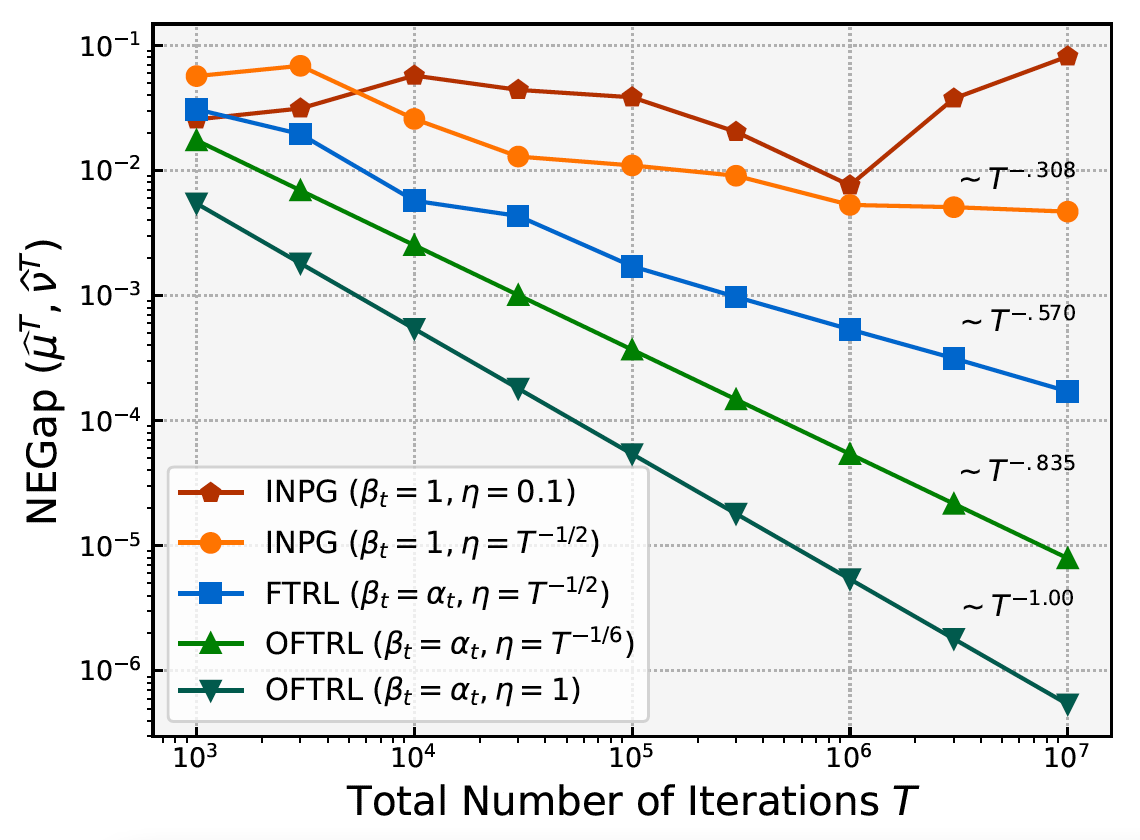

- Policy Optimization for Markov Games: Unified Framework and Faster Convergence

Runyu Zhang*, Qinghua Liu*, Huan Wang, Caiming Xiong, Na Li, Yu Bai

Advances in Neural Information Processing Systems (NeurIPS), 2022

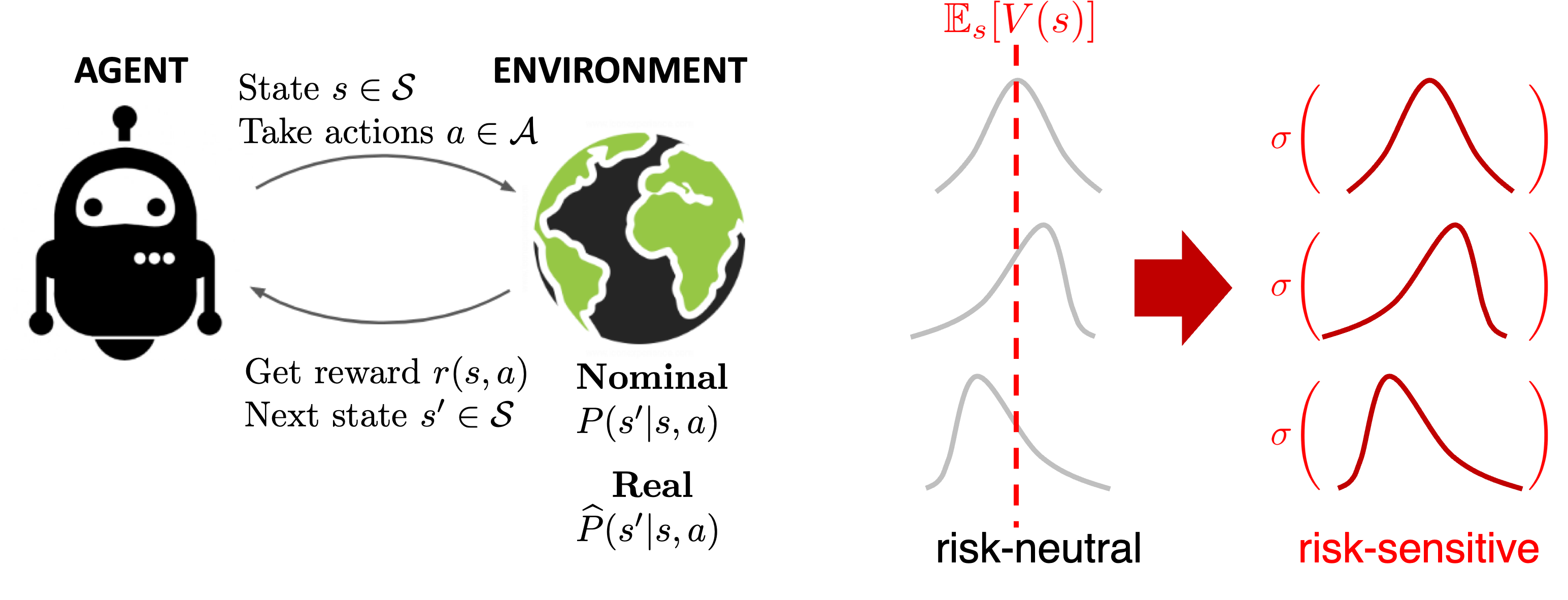

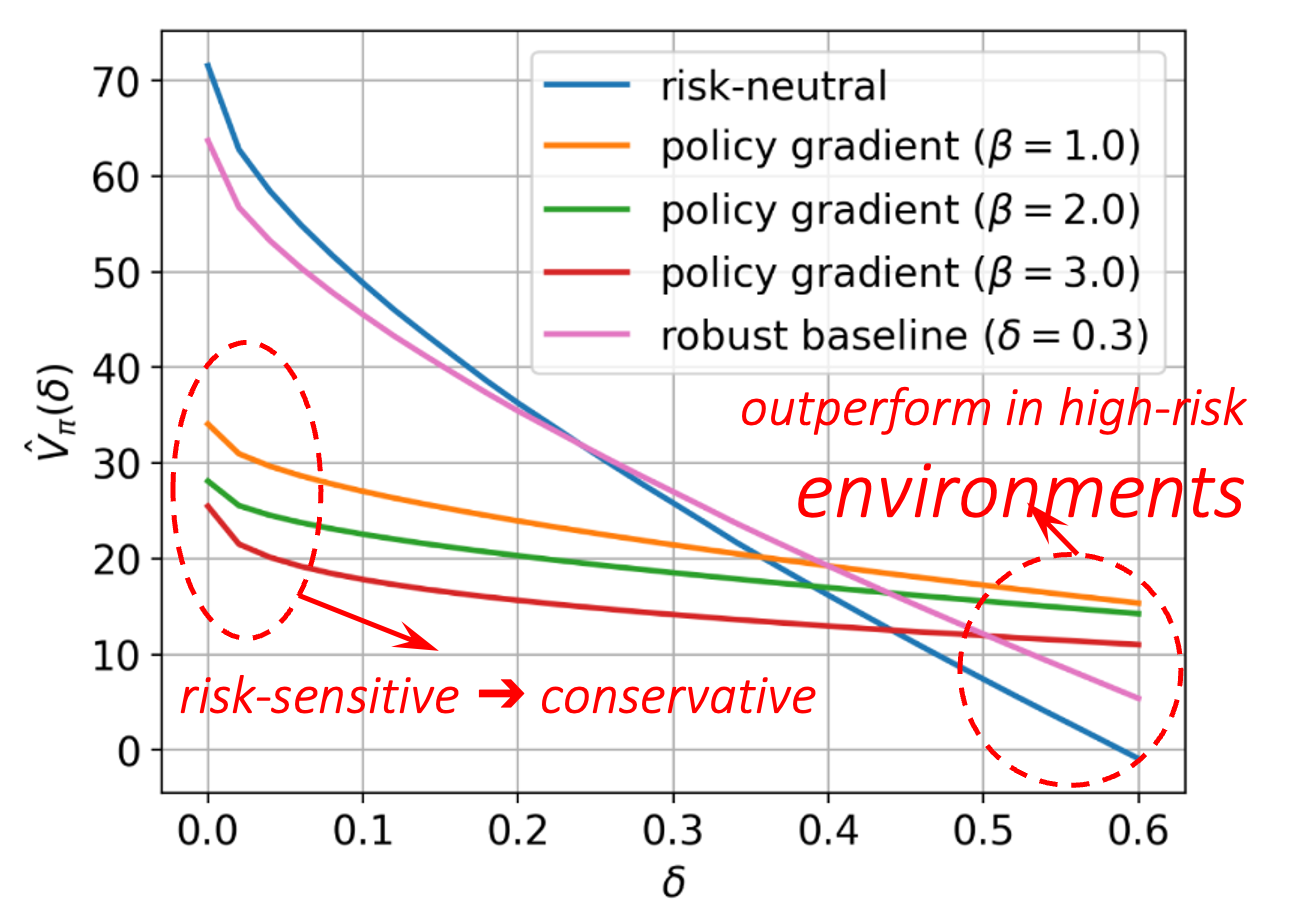

Topic 3: Robust, Risk-sensitive and Safe Reinforcement Learning

Robustness, risk-sensitivity, and safety are desirable properties for tasks such as online decision making and the control of dynamical systems, especially in the face of model uncertainty or estimation errors. I am actively exploring sample-efficient, practical algorithms that embody these properties. I am also interested in extending these principles to multi-agent reinforcement learning (RL), where robustness is even more critical because of the added complexity of interactions among multiple agents.

Selected publications:

- Soft Robust MDPs and Risk-Sensitive MDPs: Equivalence, Policy Gradient, and Sample Complexity

Runyu Zhang, Hu Yang, Na Li

International Conference on Learning Representations (ICLR), 2024